Microvision (MVIS) Blog: Location-based Mixed Reality for Mobile Information Services

location based services

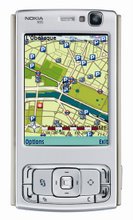

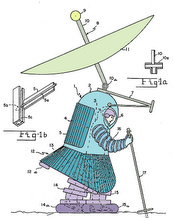

By Dr. Fotis Liarokapis

With advances in tracking technologies, new and challenging navigational applications have emerged. The availability of mobile devices with Global Positioning System (GPS) receivers has stimulated the growth of location-based services, or LBS, such as location-sensitive directories, m-commerce and mapping applications.

Today, standard GPS devices are becoming more and more affordable, and GPS promises to be the leading technology in LBS. Commercial applications such as in-car navigation systems are already established. However, for other potential applications, such as pedestrian urban navigation, standard GPS devices still fall short in three respects: accuracy (errors range from 10 to 50 meters), coverage in urban areas (between tall buildings or inside tunnels), and coverage in indoor environments.

An assisted GPS application has been proposed in which computer-vision techniques provide orientation assistance by detecting features along the navigation route. These could be either user-predefined fiducials or carefully selected real-world features (e.g. parts of buildings or whole buildings).

By combining position and orientation it is possible to design “augmented reality” (AR) interfaces, which offer a richer cognitive experience and deliver orientation information infinitely and without the limitations of maps. However, to complement the environment in an AR setup, position and orientation must be calculated continuously and in real time.
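To make the link between tracking accuracy and registration concrete, here is a minimal Python sketch (not taken from the project) of how a position and a compass-style heading place a geo-referenced label in the camera image. It assumes a flat local east/north frame in meters, a yaw-only pinhole camera (pitch and roll ignored), and illustrative values for focal length (800 px) and image width (640 px); `Pose` and `project_to_image_x` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """User pose in an assumed local east/north frame (meters) plus a compass heading."""
    east: float
    north: float
    heading_deg: float  # 0 = facing north, 90 = facing east (compass convention)


def project_to_image_x(pose, target_east, target_north, focal_px=800.0, width_px=640):
    """Horizontal pixel column where a geo-referenced target should be drawn.

    Yaw-only pinhole model: pitch and roll are ignored for simplicity.
    Returns None when the target is behind the camera.
    """
    de = target_east - pose.east
    dn = target_north - pose.north
    h = math.radians(pose.heading_deg)
    # Express the offset in camera axes: "forward" along the heading, "right" perpendicular to it.
    forward = de * math.sin(h) + dn * math.cos(h)
    right = de * math.cos(h) - dn * math.sin(h)
    if forward <= 0:
        return None
    return width_px / 2 + focal_px * right / forward


truth = Pose(0.0, 0.0, 0.0)
print(project_to_image_x(truth, 0.0, 50.0))                 # target 50 m ahead: 320.0
print(project_to_image_x(Pose(10.0, 0.0, 0.0), 0.0, 50.0))  # 10 m GPS error east: 160.0
print(round(project_to_image_x(Pose(0.0, 0.0, 5.0), 0.0, 50.0), 1))  # 5 degree heading error: about 250.0
```

With these assumed values, a building 50 m straight ahead is drawn at column 320; if GPS places the user just 10 m too far east, the same label lands at column 160, a quarter of the image width away. A 5° heading error alone shifts it by roughly 70 px, which is why standard GPS accuracy on its own is not enough for stable registration.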
The key problem for vision-based AR is the difficulty of obtaining sufficiently accurate position and orientation information in real time, which is crucial for stable registration between real and virtual objects.

The Development of Location Context tools for UMTS Mobile Information Services research project, or LOCUS, aims to significantly enhance the current map-based user-interface paradigm on mobile devices through the use of virtual reality (VR) and AR techniques. Based on the principles of both AR and VR, a prototype mixed reality interface has been designed to superimpose location-aware 3D models, 3D sound, images and textual information in both indoor and outdoor environments. As a case study, the campus of City University (London) has been modeled, and preliminary tests of the system were performed during outdoor navigation within the campus.

Research Issues

A number of principal research issues related to good calibration and registration techniques are being addressed. They include:

- Registration of geographical information with real objects in real time
- Use of mixed reality for spatial visualization at decision points
- Integration of visualized geographic information with other location-based services

System Architecture and Functionality

To explore the potential of augmented reality in practice, we have designed a tangible mixed reality interface. On the mobile device side, the system architecture comprises a tracking sub-system, a camera sub-system, a graphical representation sub-system and a user-interface sub-system.

The AR application acquires both location and orientation information through a client API on the mobile device and sends it to the server. The server builds and renders the scene graph associated with the selected location and returns it to the client for portrayal.

Image-based Modelling

The first significant challenge is to model the areas of interest as accurately and realistically as possible.
We are modeling the 3D scene around the user by means of image-based modeling techniques. A partner on the project, GeoInformation Group (Cambridge, U.K.), provided a unique and comprehensive set of building height/type and footprint data for London. The 3D models are then extruded up from Mastermap building footprints to the heights held in the GIG City heights database.

Next, textures are captured manually with a five-megapixel digital camera in order to create a more realistic virtual environment.

Mixed Reality Pre-navigational Experiences

The stereotypical representation for self-localization and navigation is the map, which leads to the assumption that a digital map is also an appropriate representation of the environment on mobile devices. However, maps are designed for a detached overview (allocentric) rather than a self-referential personal view (egocentric), which poses new challenges. To test these issues we have developed an indoor pre-navigation visual interface that simulates the urban environment in which the user will ultimately navigate.

In particular, a novel mixed reality interface, referred to as MRGIS, was implemented that uses computer-vision techniques to estimate the camera position and orientation in six degrees of freedom and to register geospatial multimedia information in real time.
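The post does not say how MRGIS computes the six-degree-of-freedom camera pose. For a flat, pre-calibrated marker card, one standard technique is to fit the plane-to-image homography with the direct linear transform (DLT) and decompose it using the camera intrinsics, as in Zhang's calibration method. The NumPy sketch below illustrates that technique under stated assumptions: a known intrinsic matrix `K`, marker corners lying on the plane z = 0 in marker coordinates, and clean corner detections. `homography_dlt`, `pose_from_marker` and `project_marker` are hypothetical helpers, not MRGIS code.

```python
import numpy as np


def homography_dlt(obj_xy, img_uv):
    """3x3 homography mapping planar marker points (X, Y) to pixels (u, v), up to scale.

    Plain DLT without Hartley normalisation: adequate for clean synthetic data;
    noisy detections would call for normalisation and non-linear refinement.
    """
    rows = []
    for (X, Y), (u, v) in zip(obj_xy, img_uv):
        rows.append([-X, -Y, -1.0, 0.0, 0.0, 0.0, u * X, u * Y, u])
        rows.append([0.0, 0.0, 0.0, -X, -Y, -1.0, v * X, v * Y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    return vt[-1].reshape(3, 3)  # right singular vector of the smallest singular value


def pose_from_marker(obj_xy, img_uv, K):
    """Rotation R (3x3) and translation t (3,) of a marker lying on its own z = 0 plane."""
    M = np.linalg.inv(K) @ homography_dlt(obj_xy, img_uv)  # equals s * [r1 r2 t] for unknown s
    scale = 1.0 / np.linalg.norm(M[:, 0])                  # r1 must have unit length
    if M[2, 2] < 0:                                        # pick the sign that gives t_z > 0
        scale = -scale
    r1, r2, t = scale * M[:, 0], scale * M[:, 1], scale * M[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    u, _, vt = np.linalg.svd(R)  # snap to the nearest proper rotation matrix
    return u @ vt, t


def project_marker(K, R, t, obj_xy):
    """Pinhole projection of marker points (X, Y, 0) into pixel coordinates."""
    pts = np.array([[X, Y, 0.0] for X, Y in obj_xy])
    cam = pts @ R.T + t
    uvw = cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]
```

In a real system the four corner pixels would come from a marker detector; the recovered rigid pose (R, t) is what allows virtual models and labels to stay registered to the card as the camera moves. `project_marker` is included only to generate or check corner positions for a known pose.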
Two procedures are being pursued for calibration: a technique involving a set of pre-calibrated marker cards, and an algorithm that uses image processing to identify fiducial points in the scene.

In the context of LOCUS, the geospatial information that we believe would significantly improve the navigation process includes 3D maps, 2D maps (with other graphical overlays), 2D and 3D textual information (guidance, description and historical information) and spatial sound (narration).

Another advantage of the system is that it gives the user a tool to control the form of visualization and the level of interaction with geographical information, in both VR and AR environments. MRGIS can seamlessly operate with any number of input devices.

The main virtue of the interface is that it combines the multimedia information effectively without sacrificing the overall efficiency of the application. Thus, different pre-navigation scenarios can be supported to meet the requirements and demands of the users.

In terms of augmentation, MRGIS also supports VRML animation. Animation as a navigational aid is an unexplored area, and we believe it has significant potential. To illustrate the capabilities of animation, a virtual representation of a human, an avatar, was created in the virtual scene, and a potential walkthrough around City University’s campus was designed. This makes the application look more ‘alive’, thus increasing the user’s feeling of immersion in the environment.

The mixed reality system has been demonstrated to a number of industrial partners and at scientific conferences, with encouraging feedback. The avatar-based walkthrough received positive initial feedback, but further tests of its usefulness need to be performed.

Augmented Reality Outdoor Navigation

Based on the indoor, pre-navigational experiences, we have designed two prototype systems for outdoor navigation, particularly in urban environments.
The work draws on the research of Columbia University’s MARS project and shares some research with a Vienna University of Technology AR tourist guide application. MARS was developed to aid navigation and provide information to tourists in a city, while the latter addresses issues such as data management for very large geographic 3D models and efficient techniques for collaborative outdoor AR user interfaces.

The end-user has two options for navigating in the real environment. The first requires some initial calibration of the environment prior to navigation. Fiducial points (marker cards) must be positioned at a number of decision points, or “hotspots,” in the outdoor environment. When the end-user reaches a decision point (which may be the end of a road), a marker card assists navigation within the designated area. A camera scans the environment for artificial marker cards, and when one is detected, helpful multimedia information is superimposed onto the card (Figure 1).

The alternative way to perform navigation is to detect natural features in the environment. On the City campus, all buildings consist of regularly sized shapes, such as rectangular windows and door entrances. Using edge detection and template matching, new fiducials can be trained and used instead of the marker cards. A screenshot demonstrating the implementation of this method is provided in Figure 2.

The major disadvantage of the “markers” scenario is that it requires preparation of the real environment and provides a limited range of operation. On the other hand, markers provide very robust tracking. The “natural features” scenario has a large range of operation with no need to prepare the environment, and thus it can be more easily applied to urban navigation and wayfinding. However, calibration is much harder to achieve, especially when dealing with buildings that look similar.
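The natural-features option relies on edge detection and template matching, but the post does not specify the detector or the matching score. The following NumPy sketch is therefore only one plausible reading: an edge map from gradient magnitude (a simple stand-in for Sobel or Canny) and brute-force zero-mean normalized cross-correlation (NCC). The names `edge_map`, `ncc_map` and `best_match`, and the toy facade, are hypothetical.

```python
import numpy as np


def edge_map(img):
    """Gradient-magnitude edge map from central differences (stand-in for Sobel/Canny)."""
    gy, gx = np.gradient(img.astype(float))
    return np.hypot(gx, gy)


def ncc_map(image, template):
    """Zero-mean normalised cross-correlation score for every template placement.

    Scores lie in [-1, 1]; placements over a perfectly flat patch get -1 (no match).
    """
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t * t).sum())
    scores = np.full((image.shape[0] - th + 1, image.shape[1] - tw + 1), -1.0)
    for r in range(scores.shape[0]):
        for c in range(scores.shape[1]):
            w = image[r:r + th, c:c + tw]
            w = w - w.mean()
            denom = np.sqrt((w * w).sum()) * t_norm
            if denom > 0:
                scores[r, c] = (w * t).sum() / denom
    return scores


def best_match(image, template):
    """(score, (row, col)) of the best top-left position; ties go to the first in scan order."""
    scores = ncc_map(image, template)
    r, c = np.unravel_index(np.argmax(scores), scores.shape)
    return float(scores[r, c]), (int(r), int(c))


# Toy "facade": two identical windows and one taller door, as binary intensities.
facade = np.zeros((40, 60))
facade[5:15, 5:12] = 1.0
facade[5:15, 30:37] = 1.0
facade[20:38, 45:53] = 1.0
edges = edge_map(facade)

door = edges[18:40, 43:55]        # "trained" fiducial: the door's edge pattern
print(best_match(edges, door))    # unique best match at (18, 43)

window = edges[3:17, 3:14]        # a window's edge pattern
scores = ncc_map(edges, window)
print(round(scores[3, 3], 6), round(scores[3, 28], 6))  # both windows score ~1.0: ambiguous
```

Matching the door is unambiguous, but the two identical windows score equally, which is a small-scale version of the difficulty with buildings that look similar. A practical system would use a faster, FFT-based or library implementation of NCC rather than this double loop.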
We plan to follow a hybrid approach in the future, using a combination of GPS and the natural-features scenario.

PDA-based Navigation

Orientation will be provided by a digital compass. A hybrid approach can then be deployed, balancing hardware (GPS and digital compass) and computer-vision techniques to achieve the best registration results. In addition, we plan to develop new tools to characterize the spatio-temporal context defining the user's geographic information needs, and to build navigation and routing applications sensitive to this context.

Conclusions

This research, funded by the EPSRC through the Pinpoint Faraday Partnership, aims to enhance the UK research base in the emerging field of mobile information science. LBS are a crucial element in the strategy to develop new revenue streams alongside voice, and this research could significantly improve the usability and functionality of these services. The design issues must now be addressed through holistic task-based studies of the applications needed, and of their usability at cognitive, ergonomic, informational and geographic levels.

posted by Ben at 11:35 AM
